Power Hungry

Silicon Valley believes more computation is essential for progress. But it ignores the resource burden and doesn’t care if the benefits materialise.

By Paris Marx

Artificial intelligence is going to upend every aspect of our society. At least, that’s what some of the leading people in the tech industry want us to believe. For the past year, they’ve been adamant that every worker will have an AI assistant, chatbots will take the place of doctors and teachers, and their products might even get to the point that they threaten our very existence. (But that shouldn’t stop us from building them.)

Now, that all sounds a bit far-fetched to me, but there’s no denying these narratives are designed to set the foundation for a much more expansive rollout of these technologies. What’s often left unsaid is the true cost of that decision. There are always people harmed in the tech industry’s commercial schemes, but what’s often less remarked upon is the material cost of the future visions they’re uniquely empowered to bring into being.

In January, OpenAI CEO Sam Altman made a rare admission of what his future entails. Speaking to Bloomberg at the World Economic Forum, he acknowledged “we still don’t appreciate the energy needs of this technology.” The amount of energy needed to power his vision for AI would require an “energy breakthrough” that he had faith (not proof) would come, and in the meantime we could rely on “geoengineering as a stopgap”.

Altman’s admission gets to something important about the trajectory of this AI boom. When we open an app or go on the web, it’s not obvious where all the data comes from. The idea of “the cloud” is designed to obscure it. But connected to the vast network of cables that surround the planet are immense warehouses stuffed with servers that require significant resources to keep running. And if our tech overlords get their way, those infrastructures will be considerably expanded in the years to come, with serious — and potentially harmful — consequences.

AI requires a lot of computing power

The cryptocurrency hype of the past few years already started to introduce people to these problems. Despite producing little to no tangible benefits — unless you count letting rich people make money off speculation and scams — Bitcoin consumed more energy and computer parts than medium-sized countries, and crypto miners were so voracious in their energy needs that they turned shuttered coal plants back on to process crypto transactions. Even after the crypto crash, Bitcoin still used more energy in 2023 than the previous year, but some miners found a new opportunity: powering the generative AI boom.

The AI tools being pushed by OpenAI, Google, and their peers are far more energy intensive than the products they aim to displace. In the days after ChatGPT’s release in late 2022, Sam Altman called its computing costs “eye-watering”. Several months later, Alphabet chairman John Hennessy told Reuters that getting a response from Google’s chatbot would “likely cost 10 times more” than using its traditional search tools. Instead of reassessing their plans, major tech companies are doubling down and planning a massive expansion of the computing infrastructure available to them.

Data centres go all the way back to early computers, but the introduction of computation as a service by Amazon Web Services (AWS) in 2006 transformed how companies approached their computing needs. Instead of buying their own server racks and eventually building their own data centres, companies new and old could turn to AWS, Microsoft Azure, or Google Cloud Platform to rent server space and subscribe to other services they might need (or be convinced they should buy) to manage their businesses. That not only further centralised computing power in the hands of a smaller number of major players; it also created some perverse incentives.

When selling computation and associated services as a business, “cloud” companies need to make everything we do more computationally intensive to justify the growth of that business, which means the demand for computation must increase and, along with it, the number of data centres needed to meet it. In practice, that means more AI tools are pushed on companies that don’t need them, more data needs to be collected, and everything we encounter needs to become bloated with unnecessary features and trackers, just to give a few examples.

There isn’t necessarily a tangible benefit to increasing the demand for computation, but it’s good for the cloud business and it plays into a basic assumption, or even ideological belief, within the tech industry that more computation is equivalent to progress. The more the services we depend on can be digitised and computers can spread throughout society, the closer we’ll be to whatever tech-mediated utopia some billionaire gleaned from a science-fiction book or movie that caught their eye in their youth. That belief allows them to look past the consequences of the accelerating buildout of large data centres.

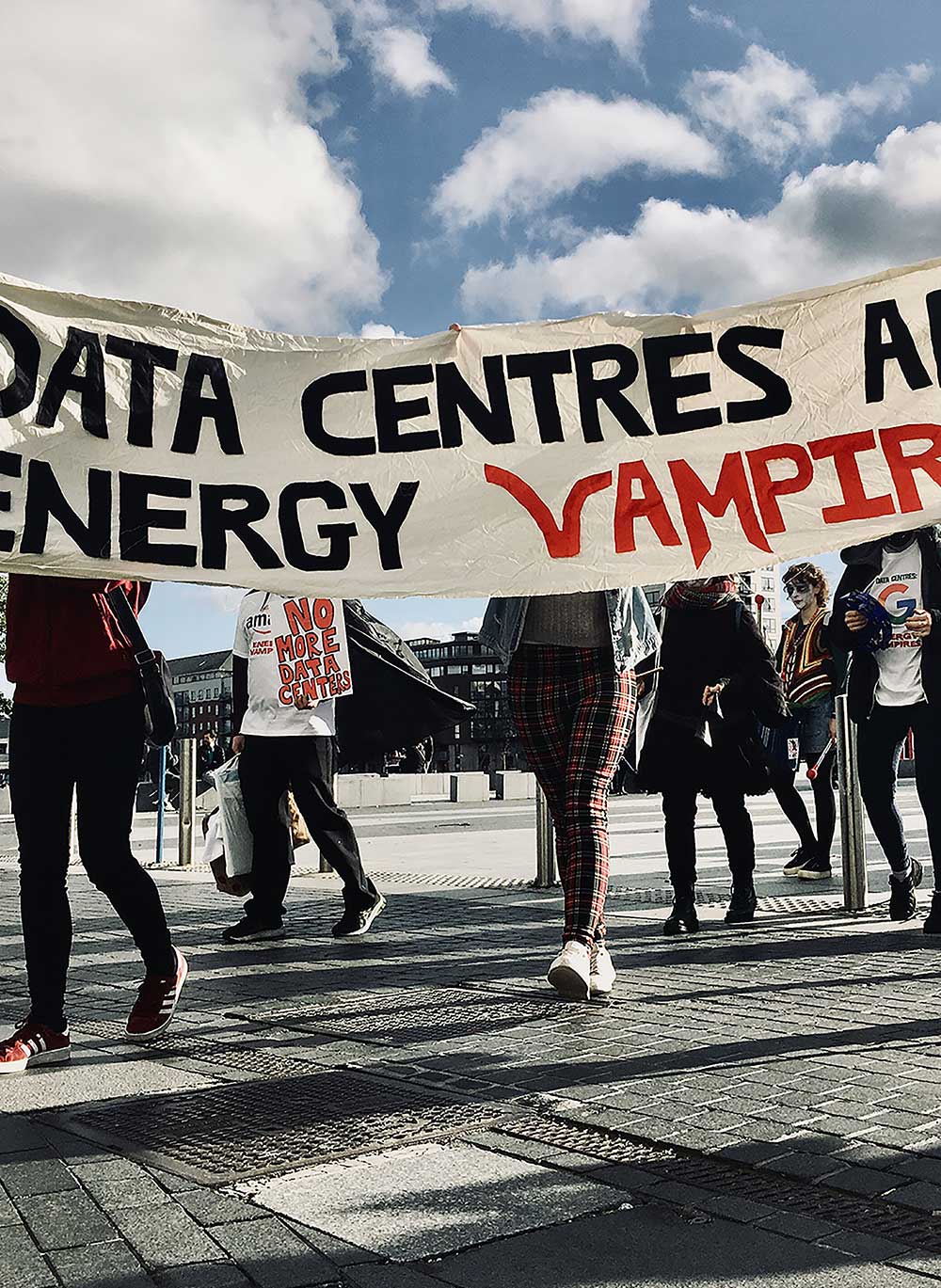

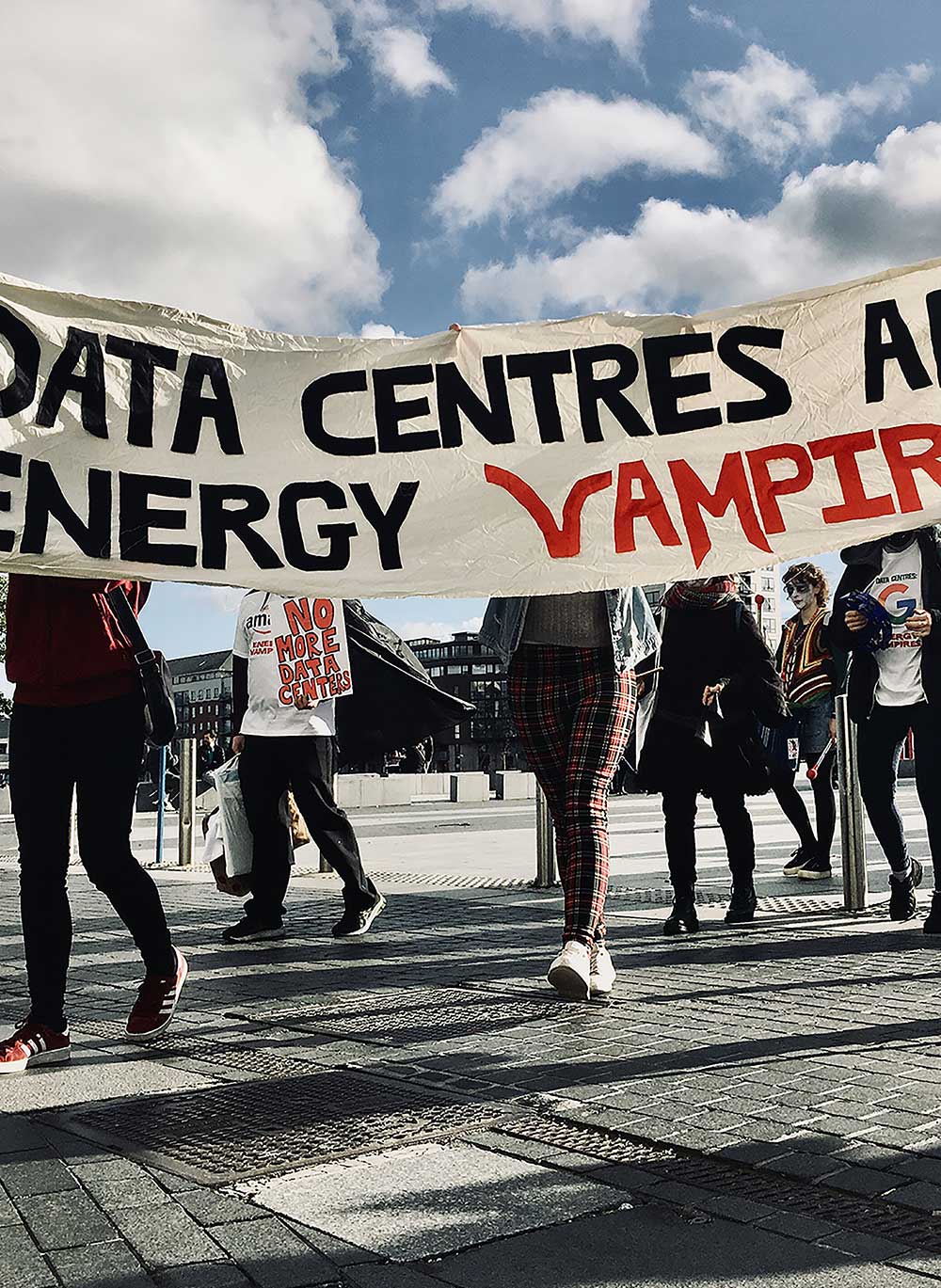

Powering the data centre boom

As the cloud took over, more computation fell into the hands of a few dominant tech companies, who made the move to what are called “hyperscale” data centres. Those facilities are usually over 10,000 square feet and hold more than 5,000 servers, but those being built today are often many times larger than that. For example, Amazon says its data centres can have up to 50,000 servers each, while Microsoft has a campus of 20 data centres in Quincy, Washington with almost half a million servers between them.

By the end of 2020, Amazon, Microsoft, and Google controlled half of the 597 hyperscale data centres in the world, but what’s even more concerning is how rapidly that number is increasing. By mid-2023, the number of hyperscale data centres stood at 926, and Synergy Research estimates another 427 will be built in the coming years to keep up with the expansion of resource-intensive AI tools and other demands for increased computation. All those data centres come with an increasingly significant resource footprint.